How Software Resilience Protects Critical Systems

At SKM Group, we see software resilience as the living backbone of modern digital ecosystems. It is the capability of your systems to absorb shocks, maintain essential functions, and recover faster than your business stakeholders expect. While many organizations still treat resilience as a “nice to have,” you already know it is now a survival metric. A resilient platform doesn’t merely avoid failure—it anticipates disruption and shapes its response.

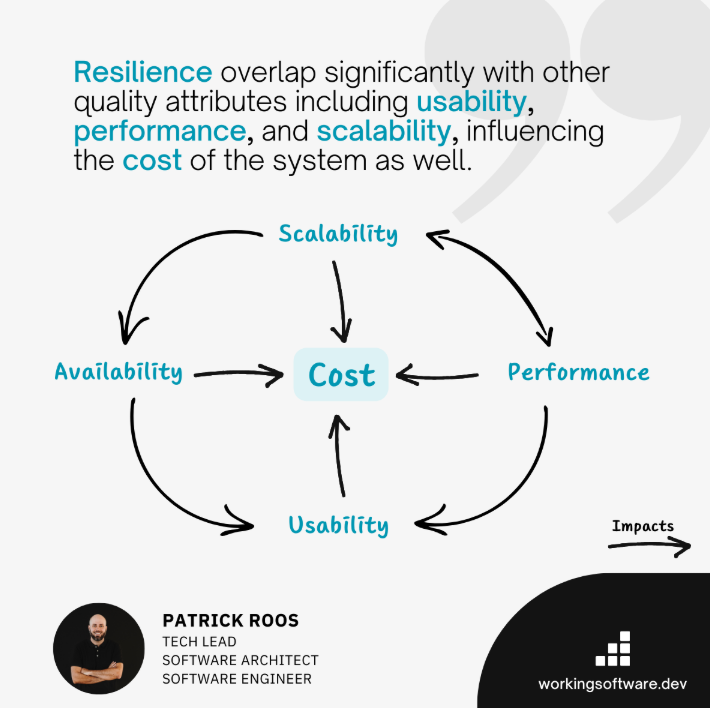

Although the terms often appear in the same conversation, resilience and reliability serve different missions. Software reliability is the probability that a system performs its intended function without failure, under specified conditions, for a designated period. In other words, reliability is a probability. Resilience, on the other hand, is adaptability.

A reliable system avoids failure; a resilient system constrains failure, isolates it, and heals on its own. Reliability asks, “How likely am I to fail?” Resilience asks, “How quickly can I recover and protect the user?” When we guide you through resilience planning at SKM Group, we focus on both dimensions—your baseline reliability and your rebound capability—so your digital operations stay predictable even in unpredictable environments.
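A short worked example makes the distinction concrete. It assumes a constant failure rate λ (exponentially distributed time to failure). That is a common textbook simplification, not a property of every system. Reliability R(t) is a probability over a time window. Steady-state availability A also depends on mean time to recovery (MTTR), and that is where resilience shows up. The figures are illustrative, not benchmarks.

```latex
R(t) = P(T > t) = e^{-\lambda t}, \qquad \mathrm{MTTF} = \frac{1}{\lambda}

A = \frac{\mathrm{MTTF}}{\mathrm{MTTF} + \mathrm{MTTR}}

% Illustrative values: MTTF = 1000 h, mission time t = 100 h
R(100\,\mathrm{h}) = e^{-100/1000} = e^{-0.1} \approx 0.905

% Same reliability, two recovery speeds
\mathrm{MTTR} = 1\,\mathrm{h}: \quad A = \frac{1000}{1001} \approx 0.99900
\mathrm{MTTR} = 0.25\,\mathrm{h}: \quad A = \frac{1000}{1000.25} \approx 0.99975
```

Faster recovery raises availability without changing λ at all. That is the practical difference between improving reliability and improving resilience.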

Across the industry, NCC Group's software resilience research has set a benchmark for understanding complex failure modes in distributed environments. As a resilience software company, NCC Group has shaped how decision-makers think about systemic risk. Its analyses highlight the chain reactions inside cloud infrastructure, the importance of root-cause-focused engineering, and the growing role of automation in preventing cascading outages.

For you as a leader planning long-term transformation, these findings reinforce a key reality: resilience requires continuous investment. Threat landscapes evolve. Traffic patterns shift. Architectural assumptions decay over time. NCC Group's research underlines that resilience is not static; it is iterative. At SKM Group, we use these insights to help you design systems that mature with your business rather than decay under pressure.

If resilience defines recovery, software reliability metrics help you measure exposure. These metrics—from MTTR to error budgets—become the compass points for your resilience strategy. They let you see where failures cluster, how faults propagate, and when reliability deterioration pushes you toward unacceptable business risk.

Metrics act as the connective tissue between engineering and leadership. They give you a financial lens on technical fragility, letting you evaluate which resilience investments create the highest ROI. For example, by quantifying failure frequency, you can decide whether redundancy, refactoring, or architectural changes offer the most effective protection for your critical systems. Without metrics, resilience becomes subjective; with them, it becomes a roadmap.
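The sketch below shows one minimal way to quantify failure frequency. It ranks components by the downtime they caused and reports a per-component MTTR. The `Incident` record, the component names, and the choice to rank by total downtime are illustrative assumptions. In practice, the data would come from your incident tracker or monitoring exports.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Incident:
    component: str           # hypothetical component/service name
    downtime_minutes: float  # user-facing downtime attributed to this incident


def rank_components(incidents: list[Incident]) -> list[tuple[str, int, float, float]]:
    """Return (component, failure_count, total_downtime, mttr) rows, worst first."""
    counts: dict[str, int] = defaultdict(int)
    downtime: dict[str, float] = defaultdict(float)
    for inc in incidents:
        counts[inc.component] += 1
        downtime[inc.component] += inc.downtime_minutes
    rows = [
        (name, counts[name], downtime[name], downtime[name] / counts[name])
        for name in counts
    ]
    # The components that cost the most downtime are the first candidates
    # for redundancy, refactoring, or architectural change.
    rows.sort(key=lambda row: row[2], reverse=True)
    return rows


if __name__ == "__main__":
    log = [
        Incident("payments-api", 30),
        Incident("payments-api", 45),
        Incident("search", 10),
        Incident("auth", 90),
    ]
    for name, n, total, mttr in rank_components(log):
        print(f"{name}: {n} failures, {total:.0f} min down, MTTR {mttr:.1f} min")
```

Ranking by total downtime rather than by raw failure count keeps one long outage from hiding behind several short blips. The right ranking key depends on what your business actually loses during an incident.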

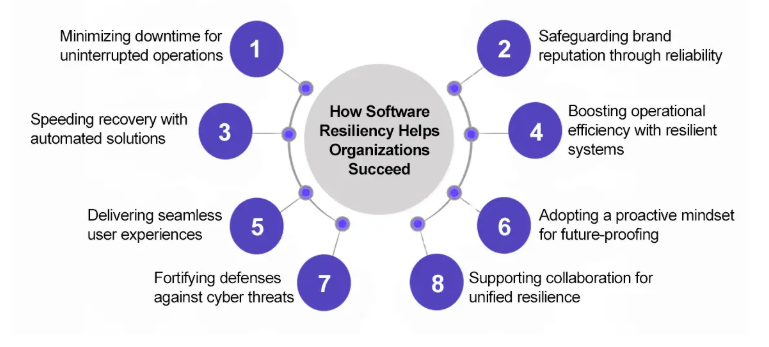

Executives often ask us: “What does resilience translate to in real numbers?” The answer is straightforward. When your application resists breakdowns, customer trust stabilizes, revenue flows uninterrupted, and operational stress drops. A resilient platform frees your teams to innovate, not firefight. It also lowers long-term maintenance costs, because resilient architectures reduce crisis-driven rewrites.

Only three moments truly define resilience for your business:

If your application remains steady through these moments, resilience becomes a competitive differentiator. It becomes the promise you make to your clients: “You can depend on us—even when conditions are harsh.” This is precisely the value SKM Group helps you deliver.

Measuring software reliability metrics gives you visibility into weaknesses long before they materialize as operational failures. Instead of reacting to outages, you build a feedback loop that predicts where your system is likely to fracture. These metrics capture signals hidden beneath normal operations—performance drift, fault frequency, interface unpredictability—and turn them into actionable intelligence.

You measure reliability not to prove stability but to discover fragility, and to understand the cost of inaction and the risk of delay. When we embed reliability measurement into your pipelines, you gain a living dashboard of your system's health. You start increasing mean time to failure by refining code quality, improving error handling, and rewriting brittle components that once silently accumulated technical debt. You also sharpen your decision-making around infrastructure budgets. Over time, metrics reshape team culture, shifting attention from patching symptoms to engineering durability.

You adopt software reliability standards when complexity outpaces your informal processes. As your system grows—more users, more data, more integrations—the margin for error shrinks. Standards give you a structured playbook for maintaining consistency, preventing regression, and aligning engineering practices with business risk.

Teams often implement these standards after a major outage reveals gaps in design, testing, or recovery. The most forward-thinking leaders adopt them earlier, before incidents set the agenda. Several standards help you validate architectural decisions, formalize expectations, and streamline audits: IEC 61508, which covers functional safety of electrical, electronic, and programmable electronic safety-related systems; ISO/IEC 25010, a product quality model that includes reliability as a quality characteristic; and the emerging resilience frameworks. They also reduce ambiguity, ensuring your teams follow the same definitions, thresholds, and protocols even as staff changes or infrastructure evolves.

Adopting standards sends a clear signal inside your organization: resilience is not optional. And at SKM Group, we help you tailor these standards so they reinforce—not restrict—your innovation velocity.

Modern software reliability tools act as your early-warning system. They collect real-time performance indicators, detect anomalies, model failure scenarios, and test your system's behavior under stress. Several kinds of tools let you see how your platform behaves under adverse conditions: chaos engineering platforms such as Gremlin and Chaos Mesh, observability platforms such as Dynatrace and New Relic, and specialized reliability simulators. Others support static analysis, SRE monitoring, anomaly detection, and predictive modeling.

The power of these platforms lies not in the dashboards but in the insight they generate. They translate mountains of raw data into clear patterns: performance drift, error spikes, resource saturation, or dependency instability. When we guide you in selecting the right toolset, we focus on proportionality—you don’t need every capability on the market, only the ones that align with your architecture, scale, and regulatory context.

Integrating Tools into CI/CD for Continuous Monitoring

When reliability tooling becomes part of your CI/CD pipeline, stability becomes a continuous discipline—not an occasional checkpoint. Integrating these tools directly into your build, test, and deployment workflows ensures that weak code paths, performance regressions, and misconfigurations are caught before they reach production.

At SKM Group, we often connect reliability tools to your automated test suites, performance baselines, and error budget policies. This way, every deployment validates not only functionality but resilience. Your CI/CD pipeline becomes a living gatekeeper that protects your uptime, enforces engineering standards, and maintains system integrity even under rapid release cycles.
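The kind of gate described above could be sketched as a small script that a pipeline step runs after performance tests. This example rests on several assumptions and does not reflect any specific tool's API. The function names are hypothetical, and the 10% p95 regression threshold and the SLO are placeholders. The latency samples and request counts would come from your own test harness and monitoring.

```python
import statistics


def p95(samples_ms: list[float]) -> float:
    """95th percentile of latency samples (needs at least two samples)."""
    return statistics.quantiles(samples_ms, n=100, method="inclusive")[94]


def error_budget_remaining(slo: float, total_requests: int, failed_requests: int) -> float:
    """Share of the error budget still unspent; zero or negative means exhausted.

    Assumes 0 < slo < 1 and total_requests > 0.
    """
    allowed_failures = (1 - slo) * total_requests
    return 1 - failed_requests / allowed_failures


def deployment_gate(
    baseline_ms: list[float],
    candidate_ms: list[float],
    slo: float,
    total_requests: int,
    failed_requests: int,
    max_regression: float = 0.10,  # placeholder: tolerate up to a 10% p95 slowdown
) -> tuple[bool, str]:
    """Decide whether a build may proceed to deployment."""
    if error_budget_remaining(slo, total_requests, failed_requests) <= 0:
        return False, "error budget exhausted: hold non-critical releases"
    base, cand = p95(baseline_ms), p95(candidate_ms)
    if cand > base * (1 + max_regression):
        return False, f"p95 latency regressed from {base:.1f} ms to {cand:.1f} ms"
    return True, "gate passed"


if __name__ == "__main__":
    baseline = [100.0, 105.0, 98.0, 110.0, 102.0, 99.0, 120.0, 101.0]
    candidate = [x * 1.3 for x in baseline]  # simulate a 30% slowdown
    print(deployment_gate(baseline, candidate, slo=0.999,
                          total_requests=100_000, failed_requests=40))
```

In a real pipeline, the script would translate a failed gate into a non-zero exit code, because that is what actually blocks the deployment step.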

Automating Failure Prediction with Reliability Software

The next frontier of resilience is prediction. With machine-learning-driven reliability engines, you can forecast where faults are likely to occur based on historical patterns, dependency correlation, and anomaly trends. Predictive tools continuously scan for deviations from normal behavior and surface signals before they escalate. This proactive layer reduces your mean time to recovery and gives your team the advantage of early intervention.
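Production prediction engines use far richer models. The core idea of scanning for deviations from normal behavior can still be shown with a rolling z-score, which serves here as a deliberately simple statistical stand-in for machine learning. The window size, the 3-sigma threshold, and the minimum history are assumptions to tune against your own traffic.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flags a value as anomalous when it sits more than `threshold` standard
    deviations from the mean of the last `window` normal observations."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_history: int = 5):
        self.history: deque = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value: float) -> bool:
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            std = statistics.stdev(self.history)
            # A perfectly flat history (std == 0) is treated as inconclusive.
            if std > 0 and abs(value - mean) / std > self.threshold:
                # Keep anomalies out of the baseline so one spike does not mask the next.
                return True
        self.history.append(value)
        return False


if __name__ == "__main__":
    detector = RollingZScoreDetector(window=20)
    latencies_ms = [101, 99, 102, 100, 98, 103, 100, 101, 250, 99]
    for i, ms in enumerate(latencies_ms):
        if detector.observe(ms):
            print(f"sample {i}: {ms} ms looks anomalous")
```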

Prediction turns resilience from reactive firefighting into preventative architecture. Instead of planning rollback strategies under pressure, you adjust capacity, refine configurations, or fix bugs long before the user feels the impact. At SKM Group, we see predictive analytics as a force multiplier for your operational stability.

Applying Software Reliability Techniques via Toolchains

Your toolchain becomes the operational engine for software reliability techniques. It helps you apply fault injection, stress testing, chaos experiments, dependency simulation, redundancy validation, and recovery tests. Every tool contributes a focused view of your system—latency tolerance, concurrency handling, resource resilience, or failover performance.
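Fault injection is easy to prototype at the function level. The decorator below is a hypothetical helper, not part of Gremlin, Chaos Mesh, or any other product. It adds latency and raises an exception with a configurable probability, so you can watch how callers react. It is meant for test and staging code paths only.

```python
import functools
import random
import time
from typing import Optional


class InjectedFault(Exception):
    """Raised on purpose to simulate a failing dependency."""


def inject_faults(error_rate: float = 0.0, extra_latency_s: float = 0.0,
                  rng: Optional[random.Random] = None):
    """Hypothetical test-only decorator: adds latency and raises
    InjectedFault with probability `error_rate`."""
    rng = rng if rng is not None else random.Random()

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            if extra_latency_s > 0:
                time.sleep(extra_latency_s)  # simulate a slow network hop
            if rng.random() < error_rate:
                raise InjectedFault(f"injected failure in {func.__name__}")
            return func(*args, **kwargs)
        return wrapper
    return decorator


if __name__ == "__main__":
    @inject_faults(error_rate=0.2, rng=random.Random(7))
    def get_price(sku: str) -> float:
        return 9.99  # stand-in for a call to a pricing service

    failures = 0
    for _ in range(1000):
        try:
            get_price("sku-123")
        except InjectedFault:
            failures += 1
    print(f"{failures} of 1000 calls failed; does the caller retry, fall back, or crash?")
```

Passing a seeded `random.Random` makes an experiment repeatable, which helps when you compare behavior before and after a fix.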

By running reliability techniques through automation, you scale your resilience maturity without expanding manual workload. You test more scenarios, identify more edge cases, and validate more assumptions than would ever be possible through conventional QA alone. This tool-driven approach accelerates your resilience learning cycle and anchors it in measurable outcomes.

Interpreting Tool-Generated Metrics for Stakeholders

Metrics matter only when stakeholders understand them. Your executives don’t need to see logs, traces, or stack dumps—they need clarity. They need to understand what a metric means for revenue, risk, and service quality. That is why interpretation is as important as measurement.

At SKM Group, we translate metrics into narrative. We help you explain how a spike in error rates affects customer churn, why MTTR reduction changes your SLAs, or how latency anomalies signal infrastructure instability. You empower decision-makers not with noise but with insight, enabling them to allocate budgets where they matter most and approve architectural changes with confidence.
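The sketch below shows how MTTR reduction changes your SLAs in terms executives can read. It converts an availability target into allowed downtime for a 30-day month, then checks whether a given incident rate and MTTR fit inside it. The model is a simplification: it assumes every incident causes full downtime for exactly the MTTR. The incident count and SLO are illustrative.

```python
MINUTES_PER_MONTH = 30 * 24 * 60  # assumption: a 30-day month (43,200 minutes)


def allowed_downtime_minutes(slo: float) -> float:
    """Downtime a monthly availability target permits."""
    return (1 - slo) * MINUTES_PER_MONTH


def monthly_availability(incidents_per_month: int, mttr_minutes: float) -> float:
    """Simplified model: each incident causes full downtime lasting exactly the MTTR."""
    downtime = incidents_per_month * mttr_minutes
    return 1 - downtime / MINUTES_PER_MONTH


if __name__ == "__main__":
    slo = 0.999
    print(f"A {slo:.1%} target allows {allowed_downtime_minutes(slo):.1f} min of downtime per month")
    for mttr in (60, 20, 10):
        availability = monthly_availability(3, mttr)
        verdict = "meets" if availability >= slo else "misses"
        print(f"3 incidents, MTTR {mttr} min -> {availability:.3%} ({verdict} the SLA)")
```

Take three incidents a month against a 99.9% target, which allows 43.2 minutes of downtime. A 60-minute or 20-minute MTTR misses the SLA, while a 10-minute MTTR meets it. That is a statement a budget holder can act on.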

Uptime is the currency of digital trust, and software reliability techniques form the operational engine behind it. These techniques help you detect failure patterns, isolate faults, reduce downtime, and maintain predictable performance. At SKM Group, we emphasize a layered approach—techniques that reinforce each other rather than operate alone.

You improve uptime by focusing on three qualities: consistency, adaptability, and observability. Consistency ensures your system behaves the same way under varied loads. Adaptability allows it to shift resources and degrade gracefully when pressure rises. Observability ensures you see issues as soon as they emerge. Techniques such as fault injection, load testing, latency modeling, and circuit breaking bring these qualities into sharp focus. When applied together, they give your system the structural strength it needs to protect user experience during operational friction.
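Circuit breaking, one of the techniques named above, stops a caller from hammering a dependency that is already failing. The class below is a minimal, single-threaded sketch of the common closed/open/half-open pattern. The thresholds are placeholders. Production systems usually rely on a maintained library or a service mesh instead.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls are rejected without reaching the dependency."""


class CircuitBreaker:
    """Minimal, single-threaded breaker: CLOSED -> OPEN after repeated failures,
    HALF_OPEN once the reset timeout elapses, CLOSED again after a successful trial call."""

    def __init__(self, failure_threshold: int = 5, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.clock = clock  # injectable so tests can control time
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "CLOSED"
        if self.clock() - self.opened_at >= self.reset_timeout_s:
            return "HALF_OPEN"
        return "OPEN"

    def call(self, func: Callable, *args, **kwargs):
        if self.state == "OPEN":
            raise CircuitOpenError("dependency marked unhealthy; failing fast")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call (HALF_OPEN) or too many failures (CLOSED) opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # success closes the circuit and clears the count
        self.opened_at = None
        return result
```

Failing fast while the circuit is open protects the struggling dependency and keeps caller latency predictable. The half-open trial call lets the system recover without human intervention.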

These practices also help you understand your own limits. When you know how your system fails, you can design it to fail well. You lower the blast radius of incidents, secure critical dependencies, and build confidence into your operational posture. The result is a platform that behaves like a resilient organism—not a fragile machine.

A resilience software company brings a specific form of operational intelligence to your organization. It understands that resilience is not only about architecture but also about process, culture, and continuous evolution. Firms working in the resilience space—such as NCC Group, whose insights shape the broader industry—focus on pinpointing systemic weaknesses and designing measures that eliminate or neutralize them.

At SKM Group, we use a similar philosophy. We help you evaluate bottlenecks, outdated dependencies, inconsistent failover paths, and parts of the system that amplify risk. This expertise extends beyond technical diagnostics. It includes strategic guidance, scenario planning, readiness assessments, and leadership-level communication. You gain a partner who views resilience not as a product but as a journey—one with defined milestones, measurable outcomes, and clear expectations.

A resilience partner also operates as an external pressure test for your assumptions. It ensures your architecture can meet the realities of scaling, regulatory shifts, global deployment, and changing attack surfaces. In practice, this means designing for durability, validating for predictability, and improving for longevity.

Designing for Fault Tolerance and Graceful Degradation

Fault tolerance starts at the design phase. When you intentionally plan for failure, your system becomes harder to break. You identify critical paths, dependency chains, and components that require redundancy. At SKM Group, we often guide you through architectural patterns that keep essential functions alive even when lower-priority features fail. This is the heart of graceful degradation—limiting damage while preserving user trust.
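Here is a minimal sketch of graceful degradation. It assumes a hypothetical storefront where product details are critical and personalized recommendations are not. `fetch_recommendations` is a stand-in that always times out, so the fallback path is visible.

```python
import logging

logger = logging.getLogger("storefront")

DEFAULT_RECOMMENDATIONS = ["bestseller-1", "bestseller-2"]  # static fallback content


def fetch_recommendations(user_id: str) -> list[str]:
    """Hypothetical non-critical dependency; always times out here to exercise the fallback."""
    raise TimeoutError("recommendation service timed out")


def render_product_page(product_id: str, user_id: str) -> dict:
    # Critical path: product details must render no matter what.
    page = {"product": product_id, "degraded": False}
    try:
        page["recommendations"] = fetch_recommendations(user_id)
    except (TimeoutError, ConnectionError) as exc:
        # Non-critical path failed: log it for monitoring and serve generic content.
        logger.warning("recommendations unavailable, serving defaults: %s", exc)
        page["recommendations"] = list(DEFAULT_RECOMMENDATIONS)
        page["degraded"] = True
    return page


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    print(render_product_page("sku-123", "user-42"))
```

The `degraded` flag gives monitoring layers a transparent signal that the page is running on fallbacks, even though users still see a working page.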

A fault-tolerant system isolates failures automatically, routes around broken services, and communicates transparently with monitoring layers. It avoids catastrophic spirals by containing issues at their origin. Whether you run microservices, monoliths, or hybrid platforms, fault tolerance must be baked into the blueprint—not patched after deployment.

Implementing Chaos Engineering and Stress Testing

Chaos engineering helps you validate your system’s limits in a controlled environment. It introduces real-world disturbances—latency spikes, resource starvation, node failures—so you can observe how your application behaves under pressure. Stress testing complements this by showing you where throughput declines, where bottlenecks emerge, and how the system handles sustained overload.
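A stress test can be sketched by driving the same operation at increasing concurrency while watching throughput and tail latency. The example below uses a thread pool against a hypothetical `fake_endpoint` that only sleeps. Against a real test environment, you would call your service instead. For serious load work, a dedicated load-testing tool is usually a better fit than a hand-rolled script.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(func) -> float:
    """Run `func` once and return its latency in milliseconds."""
    start = time.perf_counter()
    func()
    return (time.perf_counter() - start) * 1000


def stress(func, concurrency: int, requests: int) -> dict:
    """Issue `requests` calls with `concurrency` workers; report throughput and latency percentiles."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda _: timed_call(func), range(requests)))
    elapsed = time.perf_counter() - start
    return {
        "concurrency": concurrency,
        "throughput_rps": requests / elapsed,
        "p50_ms": statistics.median(latencies),
        "p95_ms": statistics.quantiles(latencies, n=100, method="inclusive")[94],
    }


if __name__ == "__main__":
    def fake_endpoint():
        time.sleep(0.01)  # stand-in for a real request to a test environment

    for level in (1, 4, 16):
        print(stress(fake_endpoint, concurrency=level, requests=64))
```

The point where throughput stops rising while p95 keeps climbing marks the bottleneck this section describes.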

We encourage you to treat chaos engineering as a routine habit rather than an occasional exercise. By regularly injecting failure into non-production environments, you uncover hidden weaknesses before they escalate. This shift trains your teams to think resiliently, respond calmly, and build systems that remain predictable when conditions deteriorate.

Utilizing Redundancy and Self-Healing Patterns

Redundancy is the architectural equivalent of insurance. It gives you backup services, backup routes, and backup infrastructure. But redundancy alone is not enough—you also need automation to activate it. This is where self-healing patterns come in. They let your system detect malfunction, spin up replacements, restart failed processes, or redirect traffic without human intervention.
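Self-healing is often implemented as a control loop that compares desired state with observed state, which is also the idea behind orchestrator controllers such as Kubernetes'. The sketch below models that loop in a few lines. `Replica`, `Supervisor`, and the simulated `restart` are hypothetical. A real system would call its orchestrator or process manager rather than flip a flag.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    healthy: bool = True
    restarts: int = 0

    def restart(self) -> None:
        # Hypothetical: a real system would ask its orchestrator to replace the process.
        self.healthy = True
        self.restarts += 1


@dataclass
class Supervisor:
    replicas: list[Replica]
    max_restarts: int = 3  # placeholder: beyond this, a human should look

    def reconcile(self) -> None:
        """One control-loop pass: restart unhealthy replicas until they hit the restart limit."""
        for replica in self.replicas:
            if not replica.healthy and replica.restarts < self.max_restarts:
                replica.restart()

    def route(self) -> Replica:
        """Redirect traffic to the first healthy replica (failover)."""
        for replica in self.replicas:
            if replica.healthy:
                return replica
        raise RuntimeError("no healthy replicas: escalate to on-call")
```

The restart limit is the "escalates intelligently" part. Automation handles the routine cases, and anything that keeps failing goes to a person.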

These patterns do more than reduce downtime; they reduce operational strain. Instead of forcing your teams to act under pressure, your platform becomes its own first responder. Recovery starts early, escalates intelligently, and stabilizes before the incident impacts users.

Establishing Robust Recovery and Rollback Strategies

Even with the best engineering, failure will happen. Your strength lies in how quickly you can revert to stability. This requires clear rollback pathways, well-practiced recovery plans, and infrastructure optimized for rapid restoration. Your teams must know exactly what to do when a deployment misbehaves or when a service enters a degraded state.
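A rollback pathway can be as simple as three steps: activate the new version, run a few health checks, and re-activate the last known-good version on the first failure. The function below sketches that flow under stated assumptions. `activate` and `health_check` are hypothetical callables that you would wire to your deployment tooling and monitoring. The number of checks and the interval are placeholders.

```python
import time
from typing import Callable


def deploy_with_rollback(
    current: str,
    candidate: str,
    activate: Callable[[str], None],
    health_check: Callable[[], bool],
    checks: int = 3,
    interval_s: float = 0.0,
) -> str:
    """Activate `candidate`, verify it, and fall back to `current` on the first failed check.

    Returns the version that is live when the function finishes.
    """
    activate(candidate)
    for _ in range(checks):
        if interval_s:
            time.sleep(interval_s)  # give the new version time to warm up between checks
        if not health_check():
            activate(current)  # fast rollback to the last known-good version
            return current
    return candidate


if __name__ == "__main__":
    live: list[str] = []
    print(deploy_with_rollback("v1", "v2", live.append, health_check=lambda: False))
    print(live)
```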

At SKM Group, we define recovery protocols that minimize ambiguity. Whether you need a fast rollback, a partial rollback, or a full service restart, the goal is always the same: protect user experience. Strong recovery strategies reinforce your uptime, safeguard your reputation, and prevent cascading failures.

Conducting Post-Incident Reviews for Continuous Improvement

Post-incident reviews close the loop on resilience. They give you honest intelligence—what broke, why it broke, how it can be prevented, and what changes need priority. These reviews should not assign blame but uncover truth. When your team grows comfortable sharing insights without pressure, resilience improves organically.

A proper review focuses on timeline, signals, root cause, downstream impact, communication gaps, and long-term mitigation. Each review becomes a learning engine. Each action item becomes a step toward greater resilience. And over time, these incremental improvements create a system that feels almost unbreakable.
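One way to make reviews consistent is to capture them in a fixed structure that mirrors the fields listed above. The dataclass below is a hypothetical template, not a standard schema. It derives time to detect and time to resolve from the timeline, so those numbers feed back into your reliability metrics. It deliberately records systems and signals rather than individuals, in keeping with a blameless review.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class PostIncidentReview:
    started: datetime   # when user impact began
    detected: datetime  # when monitoring or a person noticed
    resolved: datetime  # when service was restored
    root_cause: str
    impact: str
    signals: list[str] = field(default_factory=list)             # alerts, dashboards, reports
    communication_gaps: list[str] = field(default_factory=list)
    action_items: list[str] = field(default_factory=list)        # long-term mitigations

    @property
    def time_to_detect(self) -> timedelta:
        return self.detected - self.started

    @property
    def time_to_resolve(self) -> timedelta:
        return self.resolved - self.started
```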

In a world defined by scale, complexity, and constant change, software resilience has become a strategic advantage—not a technical afterthought. It gives you control in uncertain conditions, turning unpredictable environments into manageable challenges. It safeguards your uptime, protects your revenue, and reinforces customer confidence.

By integrating software reliability tools, adopting software reliability standards, and measuring software reliability metrics, you build a platform that doesn’t fear disruption. And by partnering with experts who understand systemic risk—both at SKM Group and within the broader insights shaped by NCC Group—you gain a resilience roadmap tailored to your business reality.

When resilience becomes part of your engineering DNA, your systems evolve from merely functioning to thriving under pressure. That’s the difference between fragility and strength, between downtime and trust, between uncertainty and leadership.

Reliability measures the probability of failure-free operation for a period of time. Resilience measures your system’s ability to recover quickly, contain failures, and protect user experience when problems occur.

Error rates, failure frequency, MTTR, system availability levels, latency anomalies, and error budgets offer the clearest visibility into real-world uptime risk.

ISO/IEC 25010, IEC 61508, and modern SRE frameworks serve as reference points for consistency, quality assurance, and architectural discipline.

Fault tolerance, chaos engineering, redundancy, self-healing automation, graceful degradation, and structured recovery pipelines significantly raise your resilience baseline.
