When you think about the cloud, you probably imagine endless virtual servers humming somewhere in the background, waiting to process your business logic. But here’s the thing—you no longer have to. With serverless architecture, the cloud is shifting away from servers you provision, maintain, and scale manually. Instead, you focus entirely on the logic, while the cloud takes care of the rest. At SKM Group, we believe this paradigm isn’t just another buzzword. It’s a fundamental shift in how modern businesses will build, innovate, and grow.

If you’re hearing “serverless” for the first time, you might assume there are literally no servers involved. But that’s not the case. Servers still exist; the difference is that you never manage them yourself. The serverless computing definition boils down to this: you run applications without worrying about infrastructure. The provider provisions the servers, scales them up or down, and charges you only for execution time.

Instead of purchasing capacity and keeping it running 24/7, you pay for what you use—down to milliseconds of compute. That’s why serverless architecture in cloud computing has become such a powerful model. It lets you focus on delivering business value instead of burning time on infrastructure management.

Serverless Computing Meaning: From FaaS to BaaS

Serverless computing splits into two major models. The first is Function as a Service (FaaS): you write a function, deploy it, and the cloud runs it whenever it’s triggered. The second is Backend as a Service (BaaS): you integrate ready-made cloud services, such as authentication, storage, or databases, without writing your own backend logic.

Think of FaaS as the engine that runs your code, and BaaS as the modular components that accelerate development. When you combine them, you build applications faster, with fewer moving parts to maintain.
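To make the FaaS half concrete, here is a minimal sketch in Python. It assumes the two-argument handler signature (event, context) used by AWS Lambda's Python runtime; the event shape and the `name` field are hypothetical, chosen only for illustration.

```python
import json


def handler(event, context):
    """Entry point the platform would call once per trigger.

    `event` carries the trigger payload; `context` carries runtime
    metadata and is unused here. The `name` field is a made-up example.
    """
    name = event.get("name", "world")
    # Return an HTTP-style response, as an API trigger would typically expect.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation with a hand-written event; no cloud account needed.
    print(handler({"name": "SKM"}, None))
```

Everything outside this function (provisioning, scaling, routing the trigger) is the provider's job, which is the point of the model.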

Core Concepts of Serverless Computing Technology

At the heart of serverless computing technology lie three key ideas. Applications should be stateless, meaning no function relies on previous execution context. They should be event-driven, meaning triggers like API calls, file uploads, or database changes activate them. And they should be fully managed, meaning all provisioning, patching, and scaling happen behind the scenes.

This philosophy changes not only how systems run but also how you design them. Suddenly, complexity moves away from operations and sits in business logic—where your competitive advantage lives.

How Serverless Architecture in Cloud Computing Differs from Traditional Models

Contrast this with traditional cloud models. In Infrastructure as a Service (IaaS), you rent servers and configure everything yourself. In Platform as a Service (PaaS), the provider manages the operating system and runtime, but you typically still choose instance sizes and plan capacity. Serverless architecture takes you one step further: no servers to configure, no capacity planning, and no idle costs.

This “consumption-based” model aligns perfectly with business agility. You avoid overpaying for unused resources, while still being able to scale instantly if traffic spikes.

Role of Serverless Microservices Architecture in Modern Systems

Now think about modern enterprise applications. They’re rarely monoliths. Instead, they’re modular collections of APIs and services. That’s where serverless microservices architecture shines. Each function is a lightweight, independent component that can be deployed, scaled, and secured on its own.

This architecture matches the reality of digital-first businesses—you want flexibility, fault tolerance, and the ability to iterate fast. Microservices powered by serverless are not just a technical convenience. They’re a way to make your business more adaptable to shifting market needs.

Comparing Serverless Computing Definition Across Major Providers

Here’s where things get nuanced. AWS, Microsoft Azure, and Google Cloud each frame the serverless computing definition slightly differently. AWS Lambda popularized FaaS, while Azure Functions emphasizes tight integration with Microsoft ecosystems. Google Cloud Functions, on the other hand, focuses on seamless integration with Google’s AI and data services.

Even though the definitions vary, the principle remains constant: you run your code without servers to manage. The challenge for you is to decide which ecosystem aligns best with your existing infrastructure and long-term strategy.

If you’re wondering why everyone’s talking about benefits of serverless architecture, here’s the answer. You eliminate operational complexity. You optimize costs. You achieve scale that would otherwise require a dedicated DevOps team. But the biggest advantage is agility.

With serverless, you can prototype faster, roll out features without delays, and adapt to customer demands in near real-time. The cloud provider automatically allocates resources whenever traffic grows. And when traffic stops? Compute charges drop to zero, though storage and other always-on services may still bill.

There’s also resilience built in. Since functions run independently, failure in one doesn’t crash the whole system. You also get global reach. Deploy functions close to your users with serverless edge computing, ensuring low latency and higher user satisfaction.

This is why serverless architecture advantages resonate so strongly in boardrooms. It’s not about replacing servers. It’s about aligning technology with business goals.

The global leaders are clear. Amazon, Microsoft, and Google dominate the serverless computing services market. AWS Lambda remains the pioneer, Azure Functions integrates seamlessly with enterprise tools, and Google Cloud Functions positions itself as the bridge between data-driven workloads and serverless execution.

But there’s more. IBM Cloud Functions, based on Apache OpenWhisk, and Oracle Functions also offer robust solutions. Edge players like Cloudflare Workers and Fastly bring serverless edge computing closer to the user, making latency-sensitive workloads more efficient.

The choice isn’t just about features. It’s about ecosystems. If your enterprise already lives in Microsoft Office 365, Azure will feel natural. If your business runs heavy analytics, Google’s AI integrations could give you the edge. AWS, with its large global footprint, is a common choice when broad regional coverage and scale matter most.

Choosing the right serverless computing platform isn’t a purely technical decision. It’s strategic. You have to weigh performance guarantees, pricing models, compliance requirements, and integration potential. At SKM Group, we guide clients through this maze because every choice has long-term implications.

Evaluating Performance and Serverless Computing Platform SLAs

Performance in serverless is a double-edged sword. On one hand, you get automatic scaling and pay-per-use efficiency. On the other, you risk “cold starts”—the delay that happens when a function spins up after being idle. That’s why you need to evaluate not only performance benchmarks but also service-level agreements (SLAs). Does the provider commit to low latency? Do they offer options like provisioned concurrency to minimize cold starts?

These questions determine whether your mission-critical workloads will meet customer expectations.

Pricing Models: Pay-as-You-Go vs. Provisioned Concurrency

The economic side of serverless computing services is often the first thing executives notice. The pay-as-you-go model is straightforward—you pay only for the number of function executions and their duration. No idle costs, no fixed monthly bills for unused capacity. This model suits unpredictable workloads or applications that spike occasionally.

Provisioned concurrency, however, addresses performance concerns. By pre-warming a certain number of functions, you reduce cold start latency. But this comes at a fixed cost, even if the functions aren’t actively used. For businesses, the decision is a balancing act between cost efficiency and performance predictability.
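The balancing act is easier to see with numbers. The sketch below compares the two billing shapes under a simplified model: execution charges depend on invocations, duration, and memory, while provisioned concurrency adds a fixed fee for keeping instances warm. Every price in it is a hypothetical placeholder, not any provider's published rate, and real providers may bill provisioned executions differently.

```python
def on_demand_cost(invocations, avg_seconds, memory_gb,
                   price_per_gb_second, price_per_million_requests):
    """Monthly cost when paying only per execution."""
    compute = invocations * avg_seconds * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def warm_capacity_cost(instances, memory_gb, hours, price_per_gb_hour):
    """Fixed monthly fee for keeping `instances` copies warm, used or not."""
    return instances * memory_gb * hours * price_per_gb_hour


if __name__ == "__main__":
    # Hypothetical workload and prices, for illustration only.
    execution = on_demand_cost(
        invocations=2_000_000, avg_seconds=0.2, memory_gb=0.5,
        price_per_gb_second=0.00002, price_per_million_requests=0.20,
    )
    warm = warm_capacity_cost(
        instances=2, memory_gb=0.5, hours=730, price_per_gb_hour=0.015,
    )
    print(f"pay-as-you-go only:      {execution:.2f}")
    print(f"with provisioned warmth: {execution + warm:.2f}")
```

With these made-up inputs the warm-capacity fee exceeds the execution charges, which is the pattern to watch for: pre-warming pays off only when the latency gain is worth a cost that does not shrink with traffic.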

Integration with CI/CD Pipelines and DevOps Workflows

If you’ve invested in DevOps culture, you’ll want your serverless computing platform to integrate seamlessly with existing CI/CD pipelines. Continuous integration ensures code changes are tested automatically. Continuous deployment automates releases so that updates roll out quickly and repeatably.

Serverless makes this faster but also more fragmented. Each function might have its own lifecycle, and version control needs to account for hundreds of microservices. The right platform should support automation, testing, and deployment at scale—without forcing your teams to reinvent processes.

Security Features and Compliance Support

Security is non-negotiable. With serverless architecture in cloud computing, responsibility shifts. The provider secures the infrastructure, but you still handle code, identity management, and access controls. Look for platforms that provide strong encryption, least-privilege IAM policies, and compliance certifications for industries like finance or healthcare.

Serverless doesn’t reduce security risk—it transforms it. Instead of patching servers, you manage permissions, monitor APIs, and ensure functions don’t expose sensitive data. The best providers offer native security tooling, from automatic secrets management to real-time vulnerability detection.

Monitoring, Logging, and Observability Tools

Visibility can make or break your adoption journey. Since functions are short-lived and stateless, traditional monitoring tools often fall short. That’s why leading providers now offer advanced observability stacks.

You need logging to track every execution, metrics to measure latency and throughput, and tracing to pinpoint bottlenecks in complex workflows. Without this, debugging distributed serverless microservices architecture becomes a nightmare. When selecting a platform, confirm that observability is not an afterthought but a first-class feature.
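As a rough illustration of logging, metrics, and tracing working together, the sketch below wraps a handler so that each invocation emits one structured log line with a duration and a correlation ID. It uses only the Python standard library; the `traced` decorator, the `correlation_id` field, and the `create_order` function are hypothetical names, not part of any provider's tooling.

```python
import json
import logging
import time
import uuid

logger = logging.getLogger("orders")


def traced(fn):
    """Wrap a handler so every invocation emits one structured log line."""
    def wrapper(event, context=None):
        # Reuse an upstream correlation ID if present so one request can be
        # followed across several functions; otherwise create a new one.
        correlation_id = event.get("correlation_id") or str(uuid.uuid4())
        start = time.perf_counter()
        status = "ok"
        try:
            return fn({**event, "correlation_id": correlation_id}, context)
        except Exception:
            status = "error"
            raise
        finally:
            # Emitted on success and on failure alike.
            logger.info(json.dumps({
                "function": fn.__name__,
                "correlation_id": correlation_id,
                "status": status,
                "duration_ms": round((time.perf_counter() - start) * 1000, 3),
            }))
    return wrapper


@traced
def create_order(event, context=None):
    # Pass the correlation ID along so downstream functions can log it too.
    return {"order_id": 1, "correlation_id": event["correlation_id"]}
```

Because each line is JSON, a log platform can filter by `correlation_id` to reconstruct one request's path and aggregate `duration_ms` into latency metrics.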

Latency matters. When your users interact with digital products, milliseconds define satisfaction. That’s where serverless edge computing enters the picture. Instead of running all functions in centralized data centers, you deploy them on edge locations—closer to the user.

For you, this means faster responses, higher availability, and compliance with regional data regulations. For example, if your application serves both European and North American markets, edge computing ensures both audiences experience consistent performance.

More importantly, edge computing isn’t just about speed. It’s about resilience. When functions run at multiple edge points, you reduce the risk of outages from regional failures. In industries where downtime equals lost revenue, this is critical.
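The resilience argument can be sketched in a few lines. Edge providers typically handle this routing themselves, so the code below is only an illustration of the idea: choose the lowest-latency location that is still healthy, and fall back to the next one when a region fails. The location names and latency figures are invented.

```python
def pick_edge(latencies_ms, healthy):
    """Return the healthy edge location with the lowest measured latency.

    latencies_ms: dict mapping location name -> latency in milliseconds.
    healthy: set of location names currently passing health checks.
    """
    candidates = {loc: ms for loc, ms in latencies_ms.items() if loc in healthy}
    if not candidates:
        raise RuntimeError("no healthy edge location available")
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    latencies = {"frankfurt": 18, "london": 25, "virginia": 95}
    print(pick_edge(latencies, {"frankfurt", "london", "virginia"}))
    # Simulate a regional failure: the request moves to the next-best location.
    print(pick_edge(latencies, {"london", "virginia"}))
```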

Timing is everything. Adopting serverless microservices architecture makes sense when agility outweighs predictability. If your application experiences unpredictable workloads, or if you’re scaling a product with global demand, serverless will reduce costs and complexity.

But if your workloads are steady and require persistent connections—like real-time gaming backends or high-frequency trading—traditional models may still be more efficient. The adoption curve depends on your business case. At SKM Group, we advise clients to start small: migrate a single service or API to serverless, measure results, and scale adoption gradually.
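One way to turn "measure results" into a decision is a simple break-even estimate: at what monthly volume does a fixed-price server become cheaper than paying per invocation? The sketch below assumes a flat per-invocation cost and a flat server price, both hypothetical, and ignores operations effort, which usually favors serverless.

```python
def break_even_invocations(fixed_monthly_cost, cost_per_invocation):
    """Monthly invocations above which a fixed-price server is cheaper."""
    if cost_per_invocation <= 0:
        raise ValueError("cost_per_invocation must be positive")
    return fixed_monthly_cost / cost_per_invocation


def cheaper_option(monthly_invocations, fixed_monthly_cost, cost_per_invocation):
    """Compare the two bills for a given monthly volume."""
    serverless_bill = monthly_invocations * cost_per_invocation
    return "serverless" if serverless_bill < fixed_monthly_cost else "fixed server"


if __name__ == "__main__":
    # Hypothetical figures: a 50.00/month server vs 0.00001 per invocation.
    print(round(break_even_invocations(50.0, 0.00001)))
    print(cheaper_option(1_000_000, 50.0, 0.00001))
    print(cheaper_option(20_000_000, 50.0, 0.00001))
```

Spiky or low-volume services tend to land well below the break-even point, which is why they make good first candidates for migration.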

Every technology shines brightest when implemented with discipline. To fully capture serverless architecture advantages, you need proven practices.

Designing Stateless Functions and Event-Driven Components

Serverless thrives on statelessness. Each function should be independent, handling a request without relying on stored session data. To maintain state, offload it to external services like databases or queues. Pair this with an event-driven design, where triggers like API calls or file uploads activate functions. This ensures scalability and modularity.
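A minimal sketch of that pattern follows. The handler keeps nothing between calls; every read and write goes through a store passed in from outside. `InMemoryStore` is a hypothetical stand-in for a real database or cache, used here only so the example runs locally.

```python
class InMemoryStore:
    """Stand-in for an external database or cache (hypothetical)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def add_to_cart(event, store):
    """Stateless handler: all state is read from and written to `store`."""
    user_id = event["user_id"]
    # Copy the stored list so the handler never mutates shared data in place.
    cart = list(store.get(user_id, []))
    cart.append(event["item"])
    store.put(user_id, cart)
    return {"user_id": user_id, "items": cart}


if __name__ == "__main__":
    store = InMemoryStore()
    add_to_cart({"user_id": "u1", "item": "book"}, store)
    print(add_to_cart({"user_id": "u1", "item": "pen"}, store))
```

Because the function holds no session data of its own, any instance can serve any request, which is what lets the platform scale it out freely.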

Mitigating Cold Starts and Optimizing Performance

Cold starts remain the most common performance issue. To mitigate them, keep functions lightweight, minimize dependencies, and use provisioned concurrency for critical workloads. Optimization also involves designing functions that execute quickly—millisecond efficiency translates directly into lower costs and better user experience.
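One common way to keep functions lightweight is to create expensive resources lazily and cache them at module level, so that only the first invocation on a fresh instance pays the setup cost. The sketch below assumes a platform that reuses a warm instance between invocations, as AWS Lambda does; `_create_client` and its simulated delay are placeholders for real setup such as an SDK client or database connection.

```python
import time

_client = None  # survives across invocations while the instance stays warm


def _create_client():
    """Stand-in for expensive setup (SDK client, DB connection, model load)."""
    time.sleep(0.05)  # simulated setup cost; hypothetical
    return {"created_at": time.time()}


def get_client():
    """Create the client on first use, then reuse it."""
    global _client
    if _client is None:
        _client = _create_client()
    return _client


def handler(event, context=None):
    client = get_client()
    # Returning the object's id shows whether the same client was reused.
    return {"client_id": id(client)}


if __name__ == "__main__":
    first = handler({})
    second = handler({})
    print(first == second)  # same client object served both calls
```

This does not remove the cold start itself; it only ensures the setup cost is paid once per instance rather than once per request.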

Implementing Robust Security and Least-Privilege IAM

Security in serverless architecture is about precision. Apply least-privilege access, ensuring each function can do only what it needs and nothing more. Keep secrets in a managed secrets store rather than in plain-text environment variables, rotate keys automatically, and audit logs regularly. The shared responsibility model means your vigilance is as important as the provider’s.
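Least privilege is easier to enforce when it is checked automatically. The sketch below scans a policy written in the AWS IAM JSON policy format and flags `Allow` statements that grant wildcard actions or resources. The `find_wildcards` helper is hypothetical, the table name is invented, and the account number is a placeholder; it is a simplistic lint, not a replacement for a provider's own policy analysis tools.

```python
def find_wildcards(policy):
    """Return (statement_index, field, value) for each overly broad grant."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        for field in ("Action", "Resource"):
            values = stmt.get(field, [])
            if isinstance(values, str):
                values = [values]
            for value in values:
                # Flag "*" anywhere, and service-wide actions such as "s3:*".
                if value == "*" or (field == "Action" and value.endswith(":*")):
                    findings.append((i, field, value))
    return findings


POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {   # Scoped: two actions on one table.
            "Effect": "Allow",
            "Action": ["dynamodb:GetItem", "dynamodb:PutItem"],
            "Resource": "arn:aws:dynamodb:eu-central-1:123456789012:table/orders",
        },
        {   # Too broad: every S3 action on every resource.
            "Effect": "Allow",
            "Action": "s3:*",
            "Resource": "*",
        },
    ],
}

if __name__ == "__main__":
    for finding in find_wildcards(POLICY):
        print(finding)
```

A check like this fits naturally into a deployment pipeline, where it can fail a build before a broad grant reaches production.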

Automating Deployment with Infrastructure-as-Code

When hundreds of functions run in parallel, manual deployment isn’t sustainable. Infrastructure-as-Code (IaC) tools like AWS SAM, Terraform, or Pulumi automate the process. IaC reduces errors, accelerates rollouts, and ensures consistency across environments. For enterprises, it’s the difference between controlled growth and chaotic sprawl.
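To show what "consistency across environments" can look like, the sketch below generates an AWS SAM template from a small Python dictionary, so every function gets the same defaults. SAM templates are usually written in YAML; producing the JSON form from Python is a choice made here for illustration. The function names, paths, and default values are hypothetical.

```python
import json


def sam_template(functions):
    """Build a SAM template dict with one function resource per entry.

    functions: dict mapping a logical ID to a config dict with the keys
    `handler` and `code_uri`, plus optional `runtime`, `memory_mb`, `timeout_s`.
    """
    resources = {}
    for logical_id, cfg in functions.items():
        resources[logical_id] = {
            "Type": "AWS::Serverless::Function",
            "Properties": {
                "Handler": cfg["handler"],
                "Runtime": cfg.get("runtime", "python3.12"),
                "CodeUri": cfg["code_uri"],
                "MemorySize": cfg.get("memory_mb", 128),
                "Timeout": cfg.get("timeout_s", 10),
            },
        }
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Transform": "AWS::Serverless-2016-10-31",
        "Resources": resources,
    }


if __name__ == "__main__":
    template = sam_template({
        "OrdersFunction": {"handler": "app.handler", "code_uri": "src/orders/"},
        "ReportsFunction": {"handler": "app.handler", "code_uri": "src/reports/",
                            "memory_mb": 512, "timeout_s": 60},
    })
    print(json.dumps(template, indent=2))
```

The output would still need to be deployed with the provider's tooling; the point is that definitions live in version control and are generated the same way every time.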

Cost Monitoring and Serverless Architecture Advantages Management

The pay-as-you-go model is powerful—but without monitoring, it can surprise you. Track usage, set alerts, and review billing dashboards. Map costs directly to business units or features. When you tie expenses to value creation, you not only optimize budgets but also gain transparency into ROI.
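Mapping costs to features can start as a small script over exported billing data. The sketch below assumes each usage record has already been tagged with a `feature` name and a `cost`; the record shape, feature names, and budget figures are all hypothetical.

```python
from collections import defaultdict


def cost_by_feature(usage_records):
    """Sum cost per feature tag."""
    totals = defaultdict(float)
    for record in usage_records:
        totals[record["feature"]] += record["cost"]
    return dict(totals)


def over_budget(totals, budgets):
    """Return features whose spend exceeds their budget, sorted by name.

    Features without a budget entry are never flagged.
    """
    return sorted(
        feature for feature, cost in totals.items()
        if cost > budgets.get(feature, float("inf"))
    )


if __name__ == "__main__":
    records = [
        {"feature": "checkout", "cost": 12.5},
        {"feature": "search", "cost": 3.0},
        {"feature": "checkout", "cost": 10.0},
    ]
    totals = cost_by_feature(records)
    print(totals)
    print(over_budget(totals, {"checkout": 20.0, "search": 5.0}))
```

Run on a schedule, a check like this turns a surprising invoice into an early alert tied to a specific feature.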

Serverless isn’t a fad. It’s a natural evolution of cloud. By removing the burden of managing servers, it frees you to focus on outcomes, not infrastructure. The benefits of serverless architecture—agility, scalability, cost alignment, and resilience—position it as the backbone of future innovation.

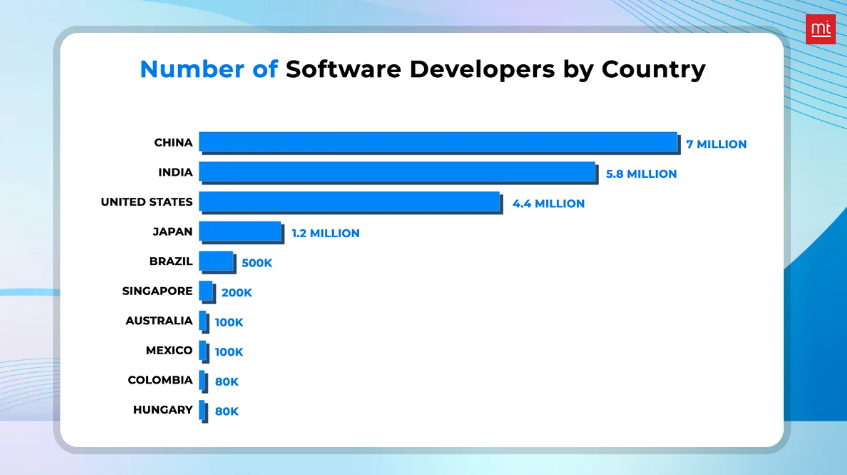

The infographic

At SKM Group, we’ve seen firsthand how businesses accelerate growth with serverless computing technology. From startups seeking rapid market entry to enterprises modernizing legacy systems, the shift is universal. If your goal is to build smarter, faster, and leaner, then serverless architecture in cloud computing isn’t just an option. It’s your next strategic move.

Traditional models require you to provision and manage servers. Serverless computing eliminates this responsibility: your provider runs the infrastructure, and you pay only for execution time.

Serverless pricing models align costs with usage. You avoid paying for idle servers and scale expenses directly with demand.

Stateful workloads are not supported directly. Serverless is designed for stateless functions; for stateful needs, you integrate external storage or databases.

Latency-sensitive applications—such as content delivery, IoT, and real-time analytics—gain the most from serverless edge computing.

AWS leads with the largest global footprint, but Azure and Google also offer wide regional availability. The right choice depends on where your users are located.

Vendor lock-in is a real risk. Every vendor uses proprietary runtime environments and integrations. To minimize the risk, design features to be portable and consider multi-cloud strategies where possible.
